[Jupyter Notebook] Build Your Own Open-source RAG Using LangChain, LLAMA 3 and Chroma

Constructing your local, zero-cost RAG pipeline using free, open-source tools


New here and don’t know where to begin?

Read the Getting Started page to find your way around The Code Compass.

0. What is Bran Stark’s age?

Here is what you will be able to do by the end of this hands-on tutorial:

Give your favorite LLM (ChatGPT, LLAMA 3, Mixtral, Gemini, Claude, etc.) some documents, ask it a question, and see it respond. With an open-weight model such as LLAMA 3, everything runs locally on open-source libraries, with zero API and tooling costs.

I was re-watching Game of Thrones and thought: wouldn't it be fun to give the LLM the scripts from the episodes and ask it a question that requires fetching the relevant context and then generating the correct response?

Me: “What is Bran’s age?”

Before I show you the RAG response: do you know Bran's age in Game of Thrones (Season 1)?

I was not sure of the correct answer either. Here is how the RAG pipeline responded to my question, with some reasoning as well as its source:

RAG output: “According to the script, Bran is 8 years old. Reasoning: Jaime asks Bran ‘How old are you, boy?’ and Bran responds with ‘Eight.’”

And was the LLM correct? I went through the script, and there it was: Jaime asking Bran “How old are you, boy?” and Bran answering “Eight”!

1. Recap: What is RAG?

Last time, we discussed what retrieval-augmented generation (RAG) is.

Making an LLM perform well on your custom use case can be a costly affair. Pre-training GPT-4 reportedly required tens of terabytes of data, over $100M, and thousands of cutting-edge GPUs.

TL;DR: RAG overcomes the limitations of LLMs by bringing in external sources of information as relevant context. It does so at a fraction of the cost of pre-training a model from scratch or fine-tuning an existing model.
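To make "bringing in external sources of information as relevant context" concrete, here is a minimal sketch of the augmentation step: retrieved passages are pasted into the prompt, each tagged with its source so the model can point back to it (as in the Bran answer above). The helper name `build_rag_prompt`, the prompt wording, and the file name `got_script.txt` are hypothetical; the notebook's actual template may differ.

```python
from typing import List, Tuple


def build_rag_prompt(question: str, contexts: List[Tuple[str, str]]) -> str:
    """Build an augmented prompt from retrieved (source, text) pairs.

    Hypothetical helper for illustration; not the notebook's actual template.
    """
    # Number each retrieved passage and keep its source so the LLM can cite it.
    context_block = "\n\n".join(
        f"[{i}] (source: {source})\n{text}"
        for i, (source, text) in enumerate(contexts, start=1)
    )
    # Instructions + context + question: the LLM only sees what we put here.
    return (
        "Answer the question using only the context below. "
        "Explain your reasoning and cite the sources you used.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Hypothetical retrieved passage; in a real pipeline it comes from the vector store.
    retrieved = [("got_script.txt", "JAIME: How old are you, boy?\nBRAN: Eight.")]
    print(build_rag_prompt("What is Bran's age?", retrieved))
```

Keeping the source label next to each passage is what lets the model say where its answer came from, rather than relying on whatever it memorized during pre-training.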

It works much like a student in an open-book exam. When faced with a question, the student can look up the latest information in textbooks or online resources, ensuring their answer is accurate and up-to-date.

Check out all the details, with visuals to help you understand the concept of RAG and what it is all about, in the previous post.

2. Building An Open-Source RAG Pipeline From Scratch

Today, we will see how you can build your own RAG pipeline.

YES! We will dive into the details and build a RAG pipeline from scratch.

I made a couple of deliberate choices for the implementation. To build the RAG pipeline, I use:

Open-source tools: You won’t need to purchase API keys from OpenAI or other vendors to get this running.

Google Colab: The whole session will use Google Colab, so you can try it out yourself.

The poll from last time worked out quite well. There was clear interest in seeing what retrieval-augmented generation looks like in Python. And here we are!

3. Why Should You Care About RAG?

Recall from last time that RAG helps overcome limitations common to all LLMs:

Integrate Sources of Information to Generate Accurate and Relevant Responses

Remedy Out-of-Date Pre-Trained Models

Model Grounding and Trustworthy LLM Responses

Enhanced Contextual Understanding

4. Hands-on Jupyter Notebook

It’s time to get your hands dirty!

You can find the Jupyter Notebook with the implementation below. I have added text annotations to help you better understand what is going on. Additionally, I have broken the process of creating a RAG from scratch (using open-source tools) into smaller parts.

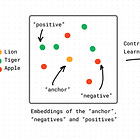
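One of the smaller parts in a pipeline like this is typically splitting long documents (here, episode scripts) into overlapping chunks before embedding them, so retrieval can return just the passage that matters. LangChain ships text splitters for this (for example `RecursiveCharacterTextSplitter`, with `chunk_size` and `chunk_overlap` parameters); the dependency-free sketch below only illustrates the underlying idea with fixed-size character windows. The `Chunk` class, the `chunk_text` function, the default sizes, and the sample text are assumptions for illustration, not the notebook's settings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    source: str  # file the text came from, kept so answers can cite it
    start: int   # character offset of the chunk in the original text
    text: str


def chunk_text(text: str, source: str, chunk_size: int = 500, overlap: int = 50) -> List[Chunk]:
    """Split text into fixed-size character windows that overlap by `overlap` chars."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks: List[Chunk] = []
    for start in range(0, len(text), step):
        chunks.append(Chunk(source, start, text[start:start + chunk_size]))
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    script = "JAIME: How old are you, boy?\nBRAN: Eight.\n" * 20  # hypothetical text
    for chunk in chunk_text(script, "got_script.txt", chunk_size=120, overlap=20)[:3]:
        print(chunk.start, repr(chunk.text[:40]))
```

The overlap is the design choice that matters: any line no longer than `overlap` characters appears whole in at least one chunk, even if it straddles a chunk boundary.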

Embeddings lie at the center of RAG. Apple and Netflix have used embeddings to solve similar problems in their products. Both the query and the documents are turned into embedding vectors, and the similarity between those vectors (for example, cosine similarity) is used to rank and retrieve the most relevant context for the query.
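The ranking step can be sketched in a few lines: embed the query and each chunk, score each pair with cosine similarity, and keep the top k. To stay self-contained, this sketch swaps in a toy bag-of-words "embedding" instead of a real neural embedding model; in the actual pipeline an embedding model produces dense vectors and Chroma performs the similarity search. The function names `embed`, `cosine`, `top_k` and the example chunks (paraphrased lines, for illustration only) are hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words vector (stand-in for a real embedding model)."""
    tokens = (t.strip(".,:;?!'\"").lower() for t in text.split())
    return Counter(t for t in tokens if t)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text has no direction; treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Rank docs by cosine similarity to the query and return the k best."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    chunks = [
        "JAIME: How old are you, boy? BRAN: Eight.",
        "NED: Winter is coming.",
        "JON: Stick them with the pointy end.",
    ]
    for score, chunk in top_k("How old is Bran?", chunks, k=1):
        print(f"{score:.2f}  {chunk}")
```

A bag-of-words stand-in only matches shared words, which is why the example query reuses the script's wording. A real embedding model is what lets "What is Bran's age?" land near "How old are you, boy?" even though the two share almost no tokens.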
